When performing a web app, mobile app, or API penetration test, many companies, including Raxis, refer to the OWASP Top 10. Here we’ll discuss what that means and why it’s helpful.

A History

The OWASP (Open Worldwide Application Security Project) Foundation is a non-profit organization with chapters worldwide. OWASP formed in 2001, and the first OWASP Top 10 was released in 2003.

While OWASP is best known for their Top 10 list, they have several other projects as well and host conferences around the world encouraging people interested in application development and cybersecurity to come together. All of their tools, documentation, and projects are free and open to all who are interested in improving application security.

OWASP states their vision as “No more insecure software,” and all of their efforts aid in that goal.

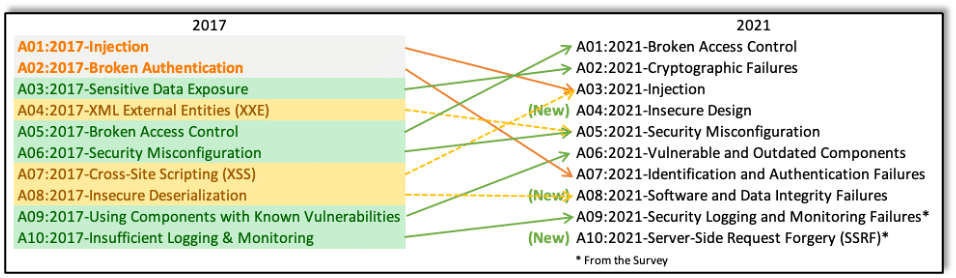

OWASP released their first Top 10 list in 2003 and continued with a new list every three years for about a decade. Updates have become less frequent as web application security has matured. The most recent Top 10 list was released in 2021, and the one before that in 2017.

More Than Just a Web App Top 10 List

While the OWASP Top 10 list focuses mostly on web applications (and sometimes mobile applications, which share a number of similarities with web applications), in the last five years, OWASP has begun releasing a Top 10 list for APIs as well. An API (Application Programming Interface) contains functions that allow internal or external applications to contact the system for viewing and sometimes editing data. As such, APIs have a number of differences from web and mobile applications when it comes to keeping systems secure.

The OWASP API Security Top Ten was first released in 2019, with the most current update in 2023. While there is some overlap with the application Top 10 list, this list focuses solely on APIs.

Updates to the Top 10 Lists

Updates to the Top 10 lists often combine vulnerabilities as cybersecurity professionals come to realize the items are related and best corrected in the same ways. As applications become more complex, OWASP has added new items to their lists as well.

Why a Top 10 List?

Knowing that application developers often have a lot of goals and limited time, and keeping with their vision of “no more insecure software,” OWASP releases its Top 10 list to give developers a succinct guide to follow within their SDLC (Software Development Lifecycle) process.

The items in the list are broad ideas that can be used in all parts of the planning, coding, and testing phases for applications. This encourages developers to build in security features early in the design phase as well as to find and add security measures later.

From a penetration testing perspective, the OWASP Top 10 list is also helpful. While malicious hackers have all the time in the world and don’t care if they crash systems and servers, penetration testers aim to discover as much as possible within an affordable time-boxed test without causing harm to an organization’s systems.

The OWASP Top 10 allows penetration testers to prioritize testing using agreed-upon standards. This way customers receive the information needed to remediate vulnerabilities in their applications and learn whether the controls they have in place are working correctly or need to be corrected. This doesn’t mean that penetration testers ignore other findings, but, within a time-boxed test, they attempt to focus first on the most critical risks.

How OWASP Creates the Top 10 List

On their website, OWASP explains their methodology for creating the OWASP Top 10 list, with eight categories from contributed data and two from a community survey. Contributed data is based on past vulnerabilities, while the survey aims to bring in new risks that may not have been fleshed out entirely in the cybersecurity world yet but that appear to be on the horizon and becoming key exposures.

The team also prioritized root causes over symptoms on this newest Top 10 list. The team held extensive discussions about all of the gathered CWEs in order to rank them by Exploitability, Detectability, and Technical Impact. The move from CVSSv2 to CVSSv3 also played a role, as there have been multiple improvements in CVSSv3, but it takes time to convert CWEs to the new framework.

Source: https://owasp.org/www-project-top-ten/

A Look at the 2021 OWASP Top 10

The most recent Top 10 list, released in 2021, added three new categories, merged a few categories, and changed the priority of several categories. Though some risks were merged, no risks were entirely removed. Here’s a look at the current OWASP Top 10 Risks (Source: https://owasp.org/www-project-top-ten):

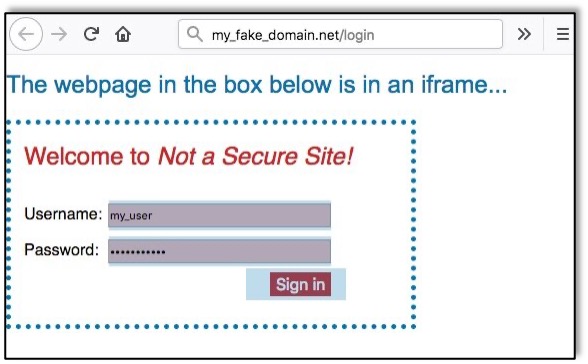

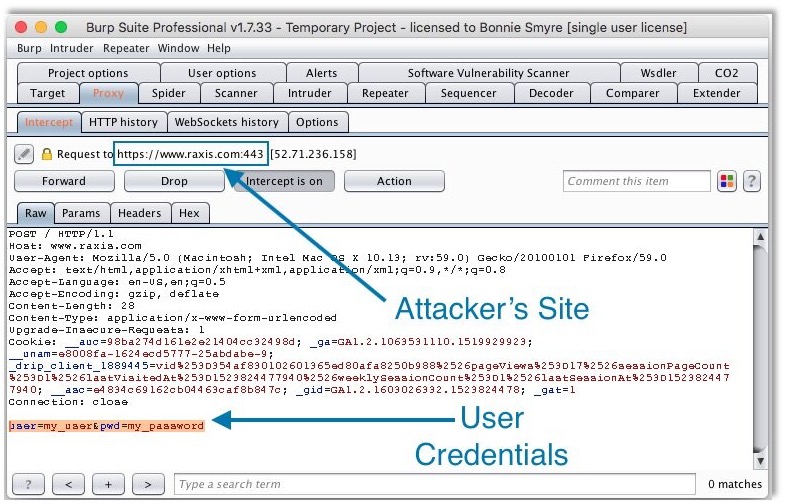

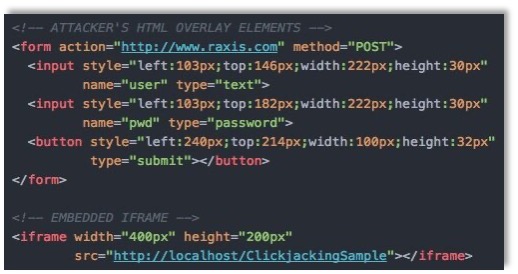

A01:2021 Broken Access Control moves up from the fifth position; 94% of applications were tested for some form of broken access control. The 34 Common Weakness Enumerations (CWEs) mapped to Broken Access Control had more occurrences in applications than any other category. Raxis published a blog about A01:2021 just after the Top 10 list was released that year. Take a look at OWASP TOP 10: Broken Access Control for more details.
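As a rough illustration of what a broken access control finding often looks like, consider an endpoint that returns a record by ID without checking who is asking. The sketch below shows a server-side ownership check. The `User`, `Invoice`, and `get_invoice` names are hypothetical and not taken from OWASP or any Raxis tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    user_id: int
    is_admin: bool = False


@dataclass(frozen=True)
class Invoice:
    invoice_id: int
    owner_id: int


class AccessDenied(Exception):
    """Raised when the requester may not view the requested record."""


def get_invoice(requester, invoice_id, invoices):
    """Return the invoice only if the requester owns it or is an admin.

    `invoices` is a hypothetical in-memory store mapping IDs to Invoice objects.
    """
    invoice = invoices.get(invoice_id)
    # Treat "missing" and "not yours" the same so IDs cannot be enumerated.
    if invoice is None or not (
        requester.is_admin or invoice.owner_id == requester.user_id
    ):
        raise AccessDenied(f"invoice {invoice_id} is not available")
    return invoice
```

The check runs on the server for every request, so it does not rely on the client hiding links or guessing IDs.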

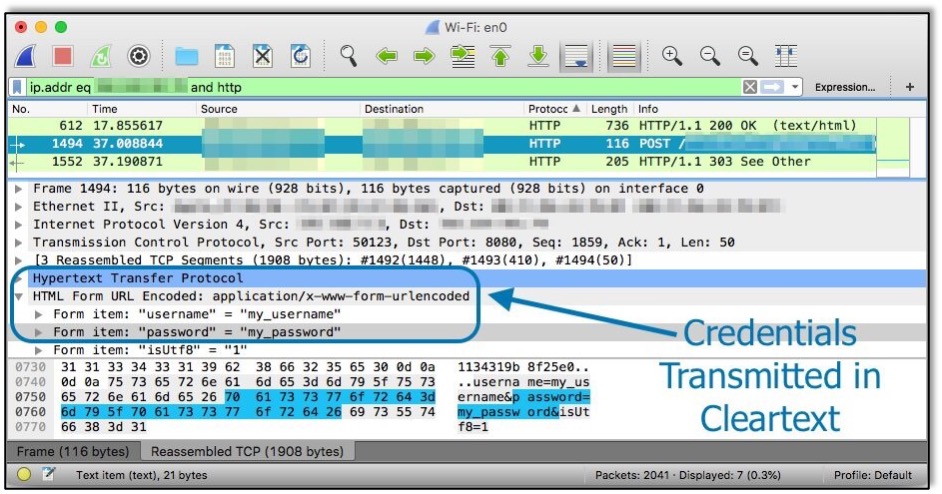

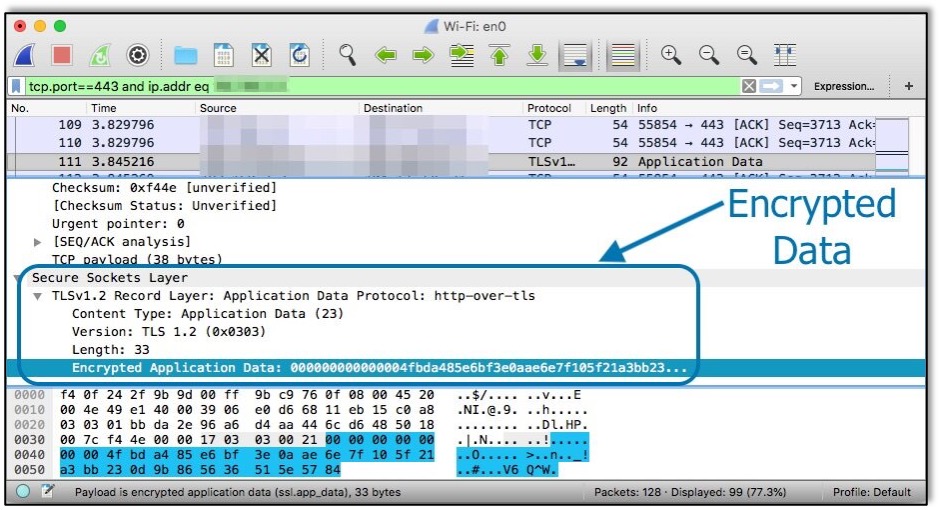

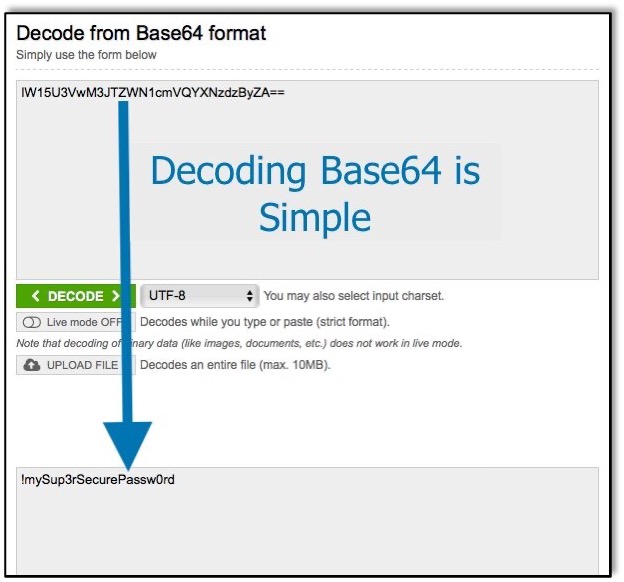

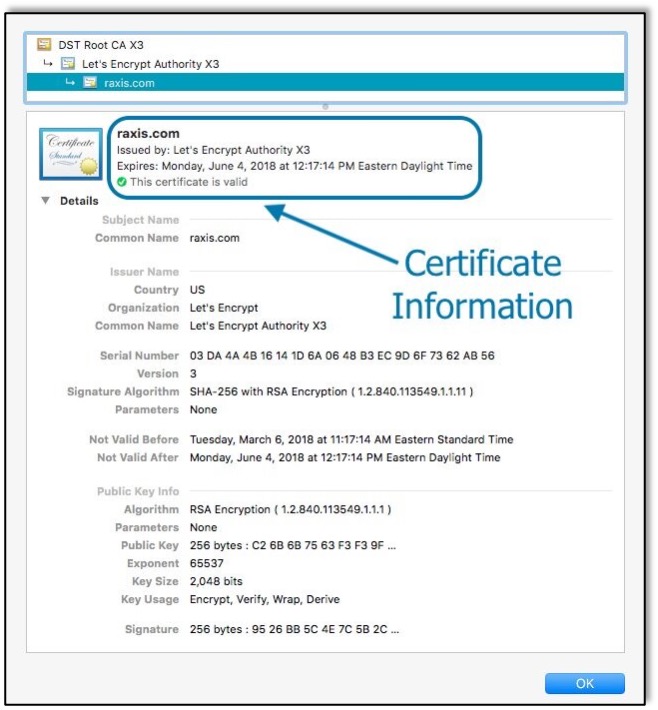

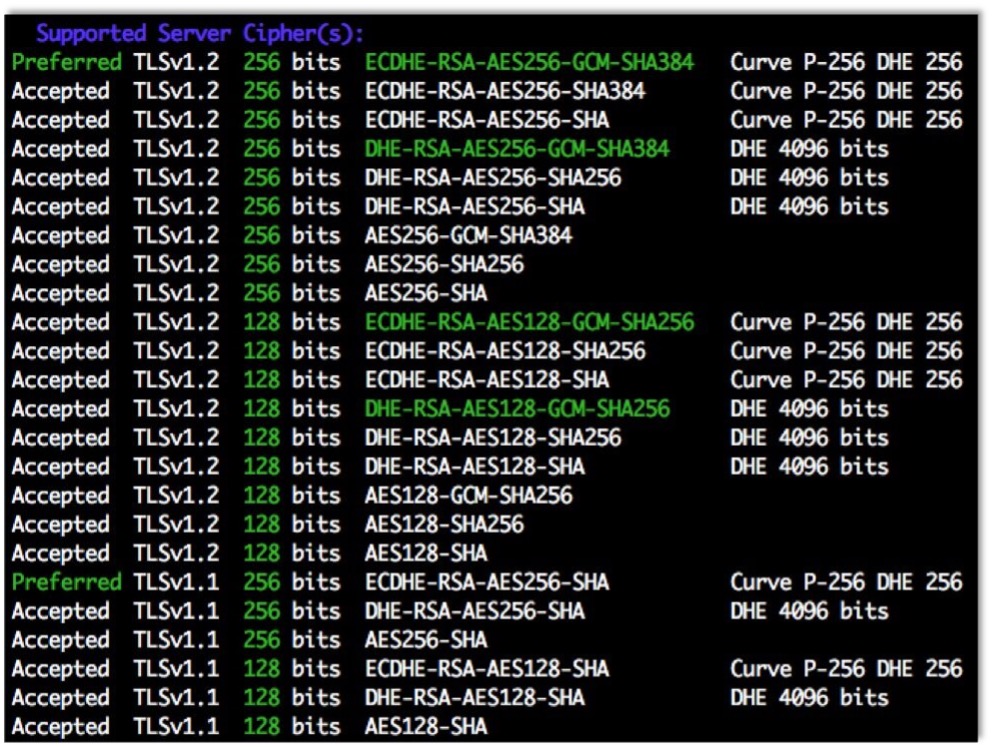

A02:2021 Cryptographic Failures shifts up one position to #2. It was previously known as Sensitive Data Exposure, which was a broad symptom rather than a root cause. The renewed focus here is on failures related to cryptography, which often lead to sensitive data exposure or system compromise.
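One common instance of this category is storing passwords with a fast, unsalted hash. A minimal sketch of a salted, slow alternative using only Python’s standard library is shown here. The function names and the iteration count are assumptions for illustration, and the work factor should be tuned to your own hardware and policy.

```python
import hashlib
import hmac
import os

# Assumed work factor for PBKDF2-HMAC-SHA256; adjust for your environment.
ITERATIONS = 600_000


def hash_password(password, salt=None, iterations=ITERATIONS):
    """Derive a key from the password with a random per-user salt."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, iterations
    )
    return salt, digest


def verify_password(password, salt, expected, iterations=ITERATIONS):
    """Recompute the derived key and compare it in constant time."""
    _, digest = hash_password(password, salt, iterations)
    return hmac.compare_digest(digest, expected)
```

Store the salt and iteration count alongside the derived key so the work factor can be raised later without locking out existing users.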

A03:2021 Injection slides down to the third position. 94% of the applications were tested for some form of injection, and the 33 CWEs mapped into this category have the second most occurrences in applications. Cross-site Scripting is now part of this category in this edition. Raxis published a blog about A03:2021 just after the Top 10 list was released that year. Take a look at 2021 OWASP Top 10 Focus: Injection Attacks for more details.
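To make the injection category concrete, the sketch below contrasts a query built by string concatenation with a parameterized query, using Python’s built-in `sqlite3` module. The `users` table and the function names are hypothetical and exist only for this example.

```python
import sqlite3


def make_demo_db():
    """Create a throwaway in-memory database with two users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany(
        "INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)]
    )
    return conn


def find_user_unsafe(conn, username):
    """Vulnerable: user input is concatenated into the SQL text."""
    query = "SELECT id, name FROM users WHERE name = '" + username + "'"
    return conn.execute(query).fetchall()


def find_user(conn, username):
    """Safer: the ? placeholder makes the driver treat input as data only."""
    cur = conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    )
    return cur.fetchall()
```

With the input `' OR '1'='1`, the concatenated version returns every row in the table, while the parameterized version looks for a user literally named that string and finds none.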

A04:2021 Insecure Design is a new category for 2021, with a focus on risks related to design flaws. If we genuinely want to “move left” as an industry, it calls for more use of threat modeling, secure design patterns and principles, and reference architectures.

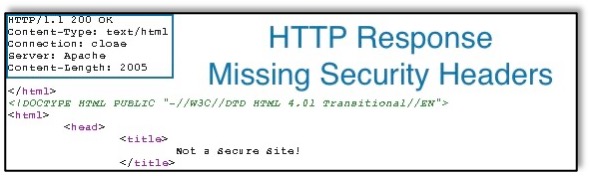

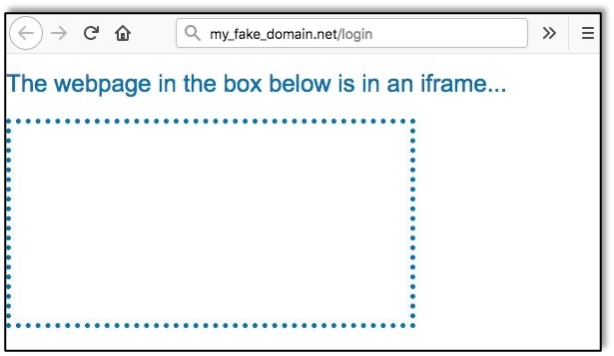

A05:2021 Security Misconfiguration moves up from #6 in the previous edition; 90% of applications were tested for some form of misconfiguration. With more shifts into highly configurable software, it’s not surprising to see this category move up. The former category for XML External Entities (XXE) is now part of this category.

A06:2021 Vulnerable and Outdated Components was previously titled Using Components with Known Vulnerabilities and is #2 in the Top 10 community survey, but also had enough data to make the Top 10 via data analysis. This category moves up from #9 in 2017 and is a known issue that is difficult to test and assess risk for. It is the only category not to have any Common Vulnerabilities and Exposures (CVEs) mapped to the included CWEs, so default exploit and impact weights of 5.0 are factored into its scores.

A07:2021 Identification and Authentication Failures was previously Broken Authentication and is sliding down from the second position, and now includes CWEs that are more related to identification failures. This category is still an integral part of the Top 10, but the increased availability of standardized frameworks seems to be helping.

A08:2021 Software and Data Integrity Failures is a new category for 2021, focusing on making assumptions related to software updates, critical data, and CI/CD pipelines without verifying integrity. This category has one of the highest weighted impacts from Common Vulnerabilities and Exposures/Common Vulnerability Scoring System (CVE/CVSS) data mapped to its 10 CWEs. Insecure Deserialization from 2017 is now a part of this larger category.
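A simple example of verifying integrity is comparing a downloaded artifact against a digest published through a trusted channel before using it. The sketch below assumes the expected SHA-256 digest is already known. The function name is hypothetical, and real pipelines typically also rely on signed releases.

```python
import hashlib
import hmac


def verify_artifact(data, expected_sha256_hex):
    """Return True only if the artifact bytes match the expected digest."""
    actual = hashlib.sha256(data).hexdigest()
    # compare_digest avoids leaking where the two values first differ.
    return hmac.compare_digest(actual, expected_sha256_hex.lower())
```

A deployment step would refuse to install or run the artifact whenever this check returns False.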

A09:2021 Security Logging and Monitoring Failures was previously Insufficient Logging & Monitoring and is added from the industry survey (#3), moving up from #10 previously. This category is expanded to include more types of failures, is challenging to test for, and isn’t well represented in the CVE/CVSS data. However, failures in this category can directly impact visibility, incident alerting, and forensics.

A10:2021 Server-Side Request Forgery is added from the Top 10 community survey (#1). The data shows a relatively low incidence rate with above-average testing coverage, along with above-average ratings for Exploit and Impact potential. This category represents the scenario where the security community members are telling us this is important, even though it’s not illustrated in the data at this time.
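Server-side request forgery typically arises when an application fetches a URL supplied by the user. One common mitigation is an allowlist of destinations, sketched below with Python’s standard library. The `ALLOWED_HOSTS` value and the function name are assumptions for illustration, and a production defense would also need to account for redirects and DNS changes between validation and use.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist of hosts the application is permitted to call.
ALLOWED_HOSTS = {"api.example.com"}


def is_url_allowed(url):
    """Accept only HTTPS URLs whose host is explicitly allowlisted."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    host = parts.hostname  # lowercased, with any userinfo and port stripped
    if host is None:
        return False
    return host in ALLOWED_HOSTS
```

Because the check uses the parsed hostname rather than a substring match, a URL such as `https://api.example.com@evil.test/` is rejected: its actual host is `evil.test`.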

What This Means for Your Application

Raxis recommends including application security from the initial stages of your SDLC process. The OWASP Top 10 lists are a great starting point to enable developers to do that.

When scheduling your web application, mobile application, and API penetration tests, you can rely on Raxis to use the OWASP Top 10 lists as a guide.